Developers usually think that Windows has a default value for the maximum number of threads a process can hold, while in fact the limit comes from the amount of address space each thread can have.

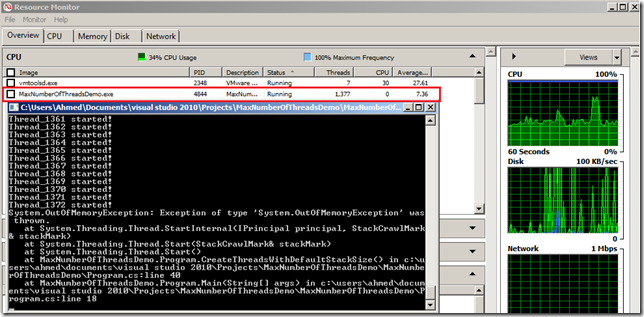

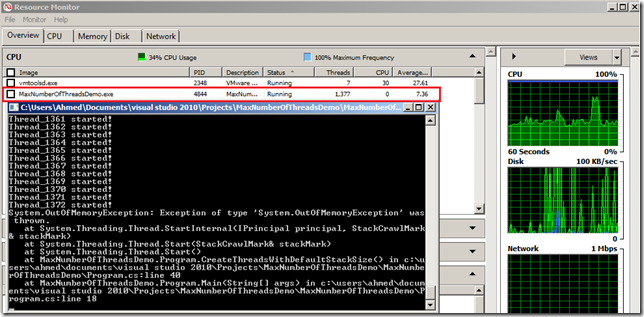

I was recently working on some mass facility simulation applications to test a complex RFID-based system. I needed a separate thread for each person in the facility to simulate the parallel, independent movement of people at the same time, and that’s when the fun started. With the simulation running more than 3000 individuals at the same time, it wasn’t too long before I got the unfriendly OutOfMemoryException.

When you create a new thread, it has some memory in kernel mode, some memory in user mode, plus its stack. The limiting factor is usually the stack size. The secret behind that is that the default stack size is 1 MB and the user-mode address space assigned to a Windows process under a 32-bit Windows OS is about 2 GB. That allows around 2000 threads per process (2000 * 1 MB ≈ 2 GB).
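The arithmetic above can be sketched in a few lines of C#. This is only a back-of-the-envelope estimate: the class and method names (`ThreadBudget`, `EstimateMaxThreads`) are made up for illustration, and the real limit is lower because the address space also holds the executable image, loaded DLLs, and heaps.

```csharp
using System;

public static class ThreadBudget
{
    // Rough upper bound: how many stacks of the given size fit into the
    // user-mode address space. Hypothetical helper, not a Windows API.
    public static long EstimateMaxThreads(long userAddressSpaceBytes, long stackSizeBytes)
    {
        if (stackSizeBytes <= 0)
            throw new ArgumentOutOfRangeException(nameof(stackSizeBytes));
        return userAddressSpaceBytes / stackSizeBytes;
    }

    public static void Main()
    {
        const long MB = 1024L * 1024L;
        const long GB = 1024L * MB;

        // 2 GB of user address space with the default 1 MB stack.
        Console.WriteLine(EstimateMaxThreads(2 * GB, 1 * MB));      // prints 2048

        // Same address space with 256 KB stacks.
        Console.WriteLine(EstimateMaxThreads(2 * GB, 256 * 1024));  // prints 8192
    }
}
```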

Hit The Limit!

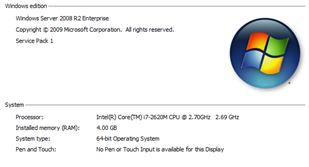

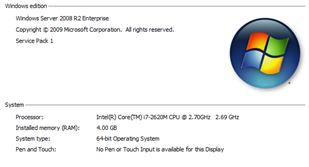

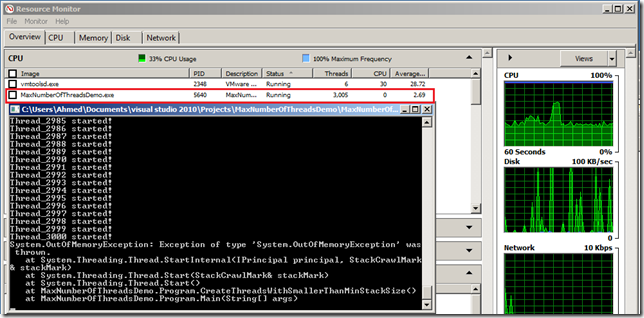

Running the following code on my virtual machine, with the specs shown in the image, creates around 1400 threads. The available memory on the machine also plays a part in deciding the maximum number of threads that can be allocated to your process.

Running the same application multiple times on different machines with different configurations will give close or similar results.

    using System;
    using System.Collections.Generic;
    using System.Threading;

    namespace MaxNumberOfThreadsDemo
    {
        public class Program
        {
            // Keeps a reference to every created thread so they can be cleaned up later.
            private static List<Thread> _threads = new List<Thread>();

            public static void Main(string[] args)
            {
                Console.WriteLine("Creating Threads ...");
                try
                {
                    CreateThreadsWithDefaultStackSize();
                    //CreateThreadsWithSmallerStackSize();
                    //CreateThreadsWithSmallerThanMinStackSize();
                }
                catch (Exception ex)
                {
                    // Expected once no more thread stacks can be reserved.
                    Console.WriteLine(ex);
                }

                Console.ReadLine();
                Console.WriteLine("Cleaning up Threads ...");
                Cleanup();
                Console.WriteLine("Done");
            }

            public static void CreateThreadsWithDefaultStackSize()
            {
                // Keep creating threads until the runtime refuses to start another one.
                for (int i = 0; i < 10000; i++)
                {
                    Thread t = new Thread(DoWork);
                    _threads.Add(t);
                    t.Name = "Thread_" + i;
                    t.Start();
                    Console.WriteLine(string.Format("{0} started!", t.Name));
                }
            }

            private static void Cleanup()
            {
                // Thread.Abort is supported on the .NET Framework only;
                // on .NET Core and later it throws PlatformNotSupportedException.
                foreach (var thread in _threads)
                    thread.Abort();
                _threads.Clear();
                _threads = null;
            }

            private static void DoWork()
            {
                // Park the thread so it stays alive and keeps its stack reserved.
                Thread.Sleep(Int32.MaxValue - 1);
            }
        }
    }

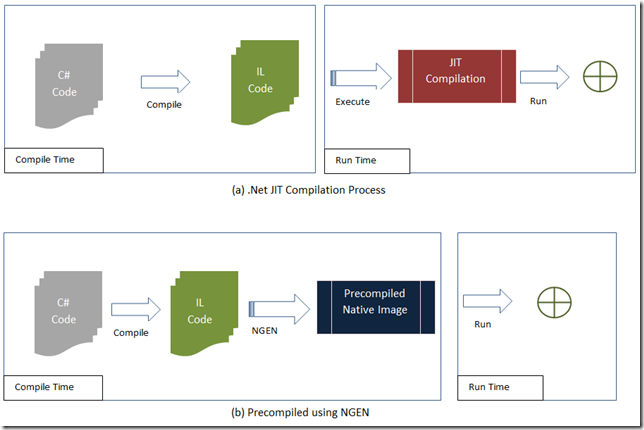

How Can You Extend the Limit?

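Well, there are several ways to get around that limit. One way is to set the IMAGE_FILE_LARGE_ADDRESS_AWARE flag in the executable’s image header, which extends the user-mode address space available to a 32-bit process to up to 3 GB on 32-bit Windows (when the system is booted with the larger user address space option) or 4 GB on 64-bit Windows.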
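To see whether a given executable already carries that flag, you can read the Characteristics field of its PE file header. The following is a minimal sketch, assuming a well-formed PE image and a little-endian machine; the class name `LargeAddressAware` and the method `IsSet` are hypothetical names chosen for this example.

```csharp
using System;
using System.IO;

public static class LargeAddressAware
{
    // Value of IMAGE_FILE_LARGE_ADDRESS_AWARE in the COFF Characteristics field.
    private const ushort ImageFileLargeAddressAware = 0x0020;

    // Returns true if the PE image has the large-address-aware flag set.
    public static bool IsSet(byte[] image)
    {
        if (image == null || image.Length < 0x40)
            throw new InvalidDataException("Image is too small to hold a DOS header.");

        // The DOS header stores the offset of the PE signature at 0x3C (e_lfanew).
        int peOffset = BitConverter.ToInt32(image, 0x3C);
        if (peOffset < 0 || peOffset > image.Length - 24)
            throw new InvalidDataException("PE header offset is out of range.");

        // The signature is the four bytes 'P', 'E', 0, 0.
        if (image[peOffset] != (byte)'P' || image[peOffset + 1] != (byte)'E' ||
            image[peOffset + 2] != 0 || image[peOffset + 3] != 0)
            throw new InvalidDataException("PE signature not found.");

        // Characteristics sits 18 bytes into the 20-byte COFF header,
        // which follows the 4-byte signature: offset + 4 + 18.
        ushort characteristics = BitConverter.ToUInt16(image, peOffset + 22);
        return (characteristics & ImageFileLargeAddressAware) != 0;
    }

    public static void Main(string[] args)
    {
        if (args.Length != 1)
        {
            Console.WriteLine("Usage: LargeAddressAware <path-to-exe>");
            return;
        }

        byte[] image = File.ReadAllBytes(args[0]);
        Console.WriteLine(IsSet(image)
            ? "Large address aware: yes"
            : "Large address aware: no");
    }
}
```

The flag itself is normally set at link time or afterwards with a tool such as Visual Studio's `editbin /LARGEADDRESSAWARE`; the sketch only reads it.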

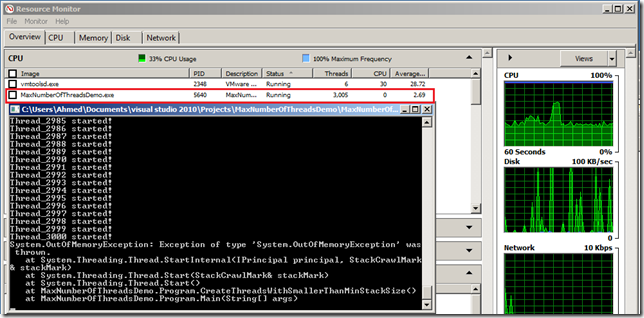

Another way is to reduce the stack size of the created threads, which in turn increases the maximum number of threads you can create. You can do that in C# by using the Thread constructor overload that takes a maxStackSize argument, given in bytes.

    Thread t = new Thread(DoWork, 256 * 1024); // maxStackSize is in bytes: 256 KB

Beginning with the .NET Framework version 4, only fully trusted code can set maxStackSize to a value that is greater than the default stack size (1 megabyte). If a larger value is specified for maxStackSize when code is running with partial trust, maxStackSize is ignored and the default stack size is used. No exception is thrown. Code at any trust level can set maxStackSize to a value that is less than the default stack size.

Running the same code after setting the maxStackSize of the created threads to 256 KB increases the maximum number of threads that can be created per process.
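As a rough illustration of that experiment, here is a sketch of what a smaller-stack variant of the demo could look like. It is not the original `CreateThreadsWithSmallerStackSize` method: the names `SmallStackDemo` and `CreateParkedThreads` are invented for this example, and it parks threads on a `ManualResetEvent` instead of sleeping so that they can be released cleanly without `Thread.Abort`.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

public static class SmallStackDemo
{
    // Starts up to maxThreads threads with the requested stack size and parks
    // them on the release event. Returns how many threads actually started.
    public static int CreateParkedThreads(int maxThreads, int maxStackSizeBytes,
                                          ManualResetEvent release, List<Thread> threads)
    {
        int started = 0;
        try
        {
            for (int i = 0; i < maxThreads; i++)
            {
                // The second constructor argument is the maximum stack size in bytes.
                var t = new Thread(() => { release.WaitOne(); }, maxStackSizeBytes);
                t.IsBackground = true;
                t.Name = "Thread_" + i;
                t.Start();
                threads.Add(t);
                started++;
            }
        }
        catch (OutOfMemoryException)
        {
            // No room left for another thread; report what we managed to start.
        }
        return started;
    }

    public static void Main()
    {
        using (var release = new ManualResetEvent(false))
        {
            var threads = new List<Thread>();
            int started = CreateParkedThreads(10000, 256 * 1024, release, threads);
            Console.WriteLine("Started {0} threads with 256 KB stacks", started);

            // Let every parked thread finish, then wait for them to exit.
            release.Set();
            foreach (var t in threads)
                t.Join();
        }
    }
}
```

On a 64-bit process the loop will most likely reach its 10000 cap rather than run out of address space, so the effect is easiest to observe in a 32-bit build.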

According to MSDN, if maxStackSize is less than the minimum stack size, the minimum stack size is used. If maxStackSize is not a multiple of the page size, it is rounded up to the next larger multiple of the page size. For example, if you are using the .NET Framework version 2.0 on Microsoft Windows Vista, 256 KB (262144 bytes) is the minimum stack size, and the page size is 64 KB (65536 bytes).
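The two rules can be modeled with a small helper. This is a sketch of the documented behavior, not the runtime's actual implementation: `StackSizeRules` and `EffectiveStackSize` are hypothetical names, and applying the minimum before the rounding is an assumption.

```csharp
using System;

public static class StackSizeRules
{
    // Models the documented rules: clamp to the minimum stack size, then
    // round up to the next multiple of the page size. Illustrative only.
    public static long EffectiveStackSize(long requested, long minimum, long pageSize)
    {
        if (pageSize <= 0)
            throw new ArgumentOutOfRangeException(nameof(pageSize));

        if (requested < minimum)
            return minimum;

        long remainder = requested % pageSize;
        return remainder == 0 ? requested : requested + (pageSize - remainder);
    }

    public static void Main()
    {
        // Figures from the MSDN example: 256 KB minimum, 64 KB page size.
        const long minimum = 262144;
        const long pageSize = 65536;

        Console.WriteLine(EffectiveStackSize(256, minimum, pageSize));     // prints 262144
        Console.WriteLine(EffectiveStackSize(300000, minimum, pageSize));  // prints 327680
        Console.WriteLine(EffectiveStackSize(1048576, minimum, pageSize)); // prints 1048576
    }
}
```

The first call shows why passing 256 instead of 256 * 1024 to the Thread constructor still works: a request below the minimum is simply raised to the minimum.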

In closing, resources aren’t free, and working with multiple threads can be really challenging in terms of the reliability, stability, and extensibility of your application. Hitting the maximum number of threads is definitely something you want to think about carefully, and perhaps consider only in lab or simulation scenarios.

More Information

Hope this Helps,

Ahmed
