In this post I’ll go through some of the enhancements the CLR team made as part of the performance work in .NET 4.5. In most cases developers will not have to do anything different to take advantage of the new features; they just work whenever the new framework libraries are used.

Improved Large Object Heap Allocator

I’ll start with the feature the community asked for most: compaction of the LOH. As you may know, the .NET CLR has a very strict classification regime for its citizens (objects). Any object that is greater than or equal to 85,000 bytes is considered special (a large object) and needs different treatment and placement. That’s why the CLR allocates a special heap for those guys, the Large Object Heap (LOH), while the poor guys live in a different heap, frankly called the Small Object Heap (SOH).


The main difference between these two communities is basically how much disturbance the CLR imposes on each society. When a collection happens on the SOH, the CLR clears the unreachable objects and then reorganizes the surviving objects (all citizens of the SOH) to compact the space and group the free space into one contiguous block. This decreases the chances of fragmentation in the heap, and it is only possible because the citizens of the SOH are lighter and easier to move around. On the other hand, when a collection occurs on the LOH (which only happens during a Gen 2 collection), the CLR doesn’t compact the memory, because moving these large objects around is a very expensive process. You see where this is leading: now we have to deal with fragmentation issues in the LOH and, from time to time, an OutOfMemoryException.


Because the LOH is not compacted, memory management happens in the classical way: the CLR keeps a free list of available blocks of memory. When allocating a large object, the runtime first looks at the free list to see whether any block can satisfy the allocation request. When the GC discovers adjacent objects that have died, it combines the space they used into one free block, which can then be used for allocation.
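To make the free-list idea concrete, here is a minimal toy model in Python. It is only a sketch of a generic non-compacting allocator, not the CLR's actual implementation: the class `ToyLargeObjectHeap`, the first-fit search, and the sizes are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Block:
    start: int   # offset of the block inside the toy heap
    size: int    # length of the block in bytes
    free: bool   # True when the block is on the free list


class ToyLargeObjectHeap:
    """A deliberately simplified, non-compacting heap (hypothetical, not CLR code)."""

    def __init__(self, capacity: int) -> None:
        # The heap starts out as one big free block.
        self.blocks = [Block(0, capacity, True)]

    def allocate(self, size: int):
        """First-fit search over the free blocks; returns an offset or None."""
        for i, block in enumerate(self.blocks):
            if block.free and block.size >= size:
                remainder = block.size - size
                self.blocks[i] = Block(block.start, size, False)
                if remainder:
                    # Keep the unused tail as a smaller free block.
                    self.blocks.insert(i + 1, Block(block.start + size, remainder, True))
                return block.start
        return None  # no single free block is large enough

    def release(self, start: int) -> None:
        """Mark a block as dead, then merge adjacent free blocks."""
        for block in self.blocks:
            if block.start == start and not block.free:
                block.free = True
                break
        merged = []
        for block in self.blocks:
            if merged and merged[-1].free and block.free:
                merged[-1].size += block.size  # coalesce neighbours
            else:
                merged.append(block)
        self.blocks = merged

    def free_sizes(self):
        return [b.size for b in self.blocks if b.free]


heap = ToyLargeObjectHeap(400)
a, b, c, d = (heap.allocate(100) for _ in range(4))
heap.release(a)
heap.release(c)
fragmented = heap.allocate(150)   # 200 bytes are free, but in two separate holes
heap.release(b)                   # the three neighbouring holes merge into one
after_merge = heap.allocate(150)  # now the request fits
```

In this model the first 150-byte request fails even though 200 bytes are free in total, which is the fragmentation problem described above. Once the block between the two holes dies, the free space is combined and the same request succeeds.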

The CLR team has not yet made the decision to compact the LOH, which is understandable given the cost of that operation. Instead, they improved the way the CLR manages the free list, making more effective use of the fragments. The CLR will now revisit the memory fragments (“free slots”) that earlier allocations didn’t use.

Also, in Server GC mode the runtime now balances LOH allocations across the heaps. Prior to .NET 4.5, only SOH allocations were balanced.

Many of the citizens of the LOH are similar in nature, which lends itself to the idea of object pools: reusing the same large objects instead of allocating new ones can substantially reduce LOH fragmentation.
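The pooling idea can be sketched in a few lines. The example below uses Python only to show the pattern; in a .NET application the pooled objects would typically be large arrays above the 85,000-byte threshold. The `BufferPool` class, its method names, and the `max_pooled` limit are hypothetical and not part of any framework API.

```python
class BufferPool:
    """Hands out reusable fixed-size buffers instead of allocating new ones."""

    def __init__(self, buffer_size: int, max_pooled: int = 4) -> None:
        self.buffer_size = buffer_size
        self.max_pooled = max_pooled
        self._idle = []        # buffers waiting to be reused
        self.allocations = 0   # how many fresh buffers were created

    def rent(self) -> bytearray:
        if self._idle:
            return self._idle.pop()            # reuse an existing buffer
        self.allocations += 1
        return bytearray(self.buffer_size)     # nothing idle, allocate a new one

    def give_back(self, buffer: bytearray) -> None:
        if len(buffer) != self.buffer_size:
            raise ValueError("buffer does not belong to this pool")
        if len(self._idle) < self.max_pooled:
            buffer[:] = bytes(self.buffer_size)  # clear old contents before reuse
            self._idle.append(buffer)


pool = BufferPool(buffer_size=100_000)
for _ in range(10):
    buf = pool.rent()
    buf[0] = 1            # pretend to do some work with the buffer
    pool.give_back(buf)
```

Ten rent/return cycles here create a single buffer. Fewer large allocations mean fewer holes left behind in a heap that is never compacted.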

Background mode for Server GC

A couple of years ago I blogged about the new Background GC in Workstation mode in CLR 4.0. The main idea is that while executing a Gen 2 collection, the CLR checks at well-defined points whether a Gen 0/Gen 1 collection has been requested; if so, Gen 2 is paused until the lower collection runs to completion, and then it resumes. Read more about Background mode for Workstation GC.

The new CLR supports Background mode for Server GC, which introduces support for concurrent collections to the server collector. It minimizes long blocking collections while continuing to maintain high application throughput.

In the schematic diagram, notice the following:

- As in .NET 4.0, whenever a Gen 0 or Gen 1 collection happens, the managed threads allocating objects on the heap are paused until the collection is done.

- Gen 2 collections now pause in Server mode too (as in Client/Workstation mode) while a Gen 0 or Gen 1 collection runs, giving the lower collection priority to finish first.

- As usual, Gen 2 runs in the background while the managed threads are still allocating objects on the SOH.

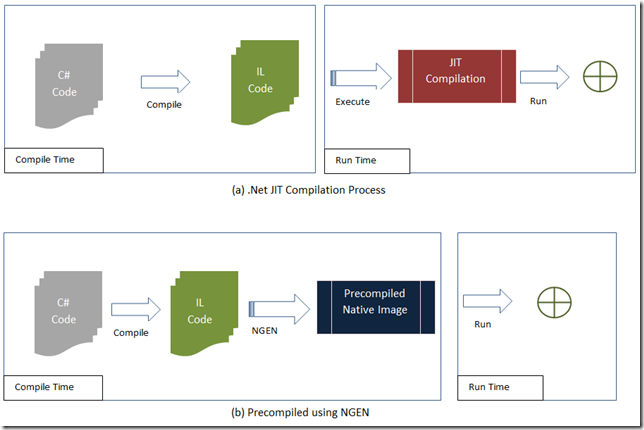

Auto NGEN

.NET Framework versions prior to 4.5 installed both the IL and the NGened images for all assemblies on dev machines, which consumes double the required space or more just for the framework. The CLR team researched which framework assemblies are most commonly used and named those the “Frequent Set”. Only this set of assemblies is NGened now, which saves a lot of space and dramatically decreases the framework’s disk footprint.

The perfectly good question that now presents itself is: what about performance when non-NGened assemblies are used?

The CLR team introduced a new replacement for the NGen engine called the Auto NGen Maintenance Task. When you create a new application that uses non-NGened assemblies, or that is not NGened itself, here is what happens:

- The user runs the application.

- Every time the application runs, it creates a new type of log called “Assembly Usage Logs” in the Windows AppData directory.

- The new Auto NGen Maintenance Task takes advantage of a new feature in Windows 8 called “Automatic Maintenance”, where efficient performance enhancement jobs can run in the background while the user is not heavily using the machine.

- The Auto NGen Maintenance Task goes through the logs from all the managed apps the user ran and builds a picture of which assemblies are frequently used by the user but not NGened, and which ones are NGened but not used frequently.

- The task then NGens the frequently used assemblies on the fly and removes the NGen images that are not used (this is known as the Reclaiming Native Images process).

- The next time the app runs, it gets a boost from using the NGened images.
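The decision step in the list above can be modelled roughly as follows. This is a hedged sketch only: the post does not describe the real log format or the heuristics the task uses, so the `plan_maintenance` function, the in-memory log representation, the `min_runs` cut-off, and the assembly names are all hypothetical.

```python
from collections import Counter


def plan_maintenance(usage_logs, ngened, min_runs=3):
    """Return (to_compile, to_reclaim) from per-run assembly usage logs.

    usage_logs: list of runs, each a list of assembly names that run loaded.
    ngened:     set of assembly names that currently have a native image.
    min_runs:   hypothetical cut-off for "frequently used".
    """
    runs_per_assembly = Counter()
    for run in usage_logs:
        runs_per_assembly.update(set(run))  # count each assembly once per run

    frequent = {name for name, runs in runs_per_assembly.items() if runs >= min_runs}
    to_compile = sorted(frequent - ngened)   # used often, but no native image yet
    to_reclaim = sorted(ngened - frequent)   # has a native image, but rarely used
    return to_compile, to_reclaim


logs = [
    ["App.exe", "Contoso.Data"],
    ["App.exe", "Contoso.Data"],
    ["App.exe", "Contoso.Data", "Contoso.Reports"],
]
already_ngened = {"Contoso.Reports", "Contoso.Legacy"}
to_compile, to_reclaim = plan_maintenance(logs, already_ngened)
```

With these made-up logs, `App.exe` and `Contoso.Data` would be candidates for NGen, while the native images for `Contoso.Reports` and `Contoso.Legacy` would be candidates for reclaiming.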

Some notes about the Auto NGen process

- The assembly must target the .NET Framework 4.5 Beta or later.

- Auto NGen runs only on Windows 8.

- For Desktop apps, Auto NGen applies only to GAC assemblies.

- For Metro style apps, Auto NGen applies to all assemblies.

- Auto NGen will not remove unused rooted native images (images NGened by developers). Basically, Auto NGen removes only the images it created itself. Read more about reclaiming native images here.

More Information

- Large Object Heap Uncovered

- OutOfMemoryException and Pinning

- Native Image Service

- Native Image Task

- Creating Native Images

- Reclaiming Native Images

Related Posts

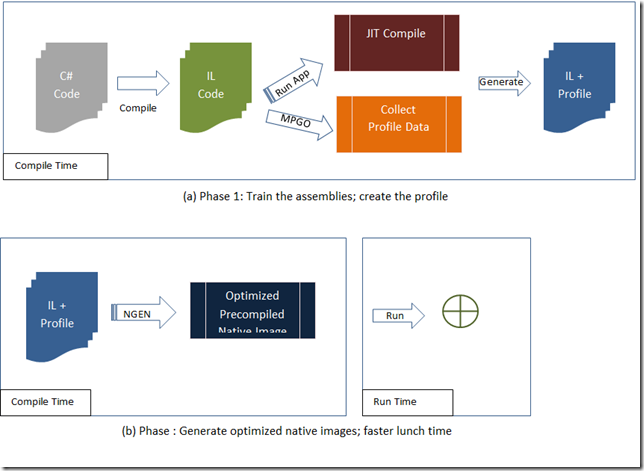

- CLR 4.5: Managed Profile Guided Optimization (MPGO)

- CLR 4.5: Multicore Just-in-Time (JIT)

- CLR 4.0: New Enhancements in the Garbage Collection

Hope this Helps,

Ahmed